The monthly membership fee for an online television and movie service is $9.80. The cost of viewing television shows online is included in the membership fee, but there is an additional fee of $1.50 to rent each movie online. For one month, Jill’s membership and movie rental fees were $12.80. How many movies did Jill rent online that month?

1

2

3

4

The correct Answer is:B

Let m be the number of movies Jill rented online during the month. Since the monthly membership fee is $9.80 and there is an additional fee of $1.50 to rent each movie online, the total of the membership fee and the movie rental fees, in dollars, can be written as 9.80 + 1.50m. Since the total of these fees for the month was $12.80, the equation 9.80 + 1.50m = 12.80 must be true. Subtracting 9.80 from each side and then dividing each side by 1.50 yields m = 2. Choices A, C, and D are incorrect and may be the result of errors in setting up or solving the equation that represents the context.

Similar Questions

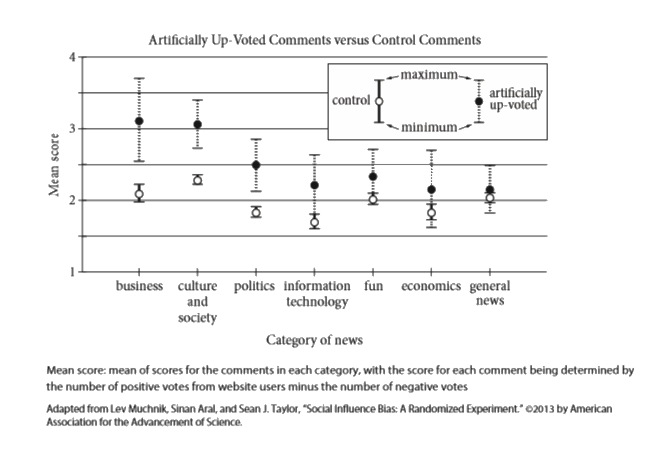

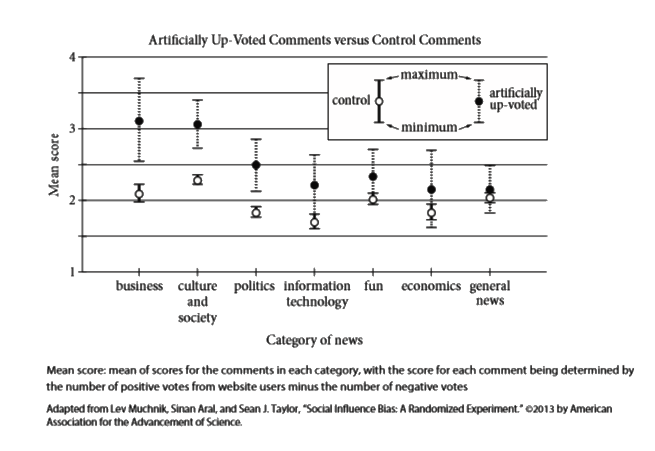

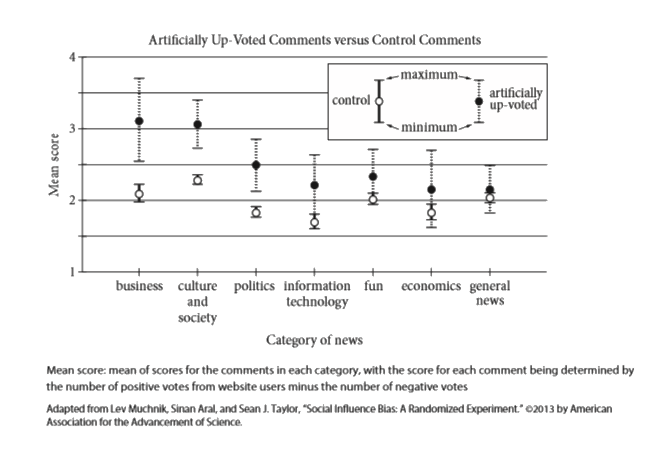

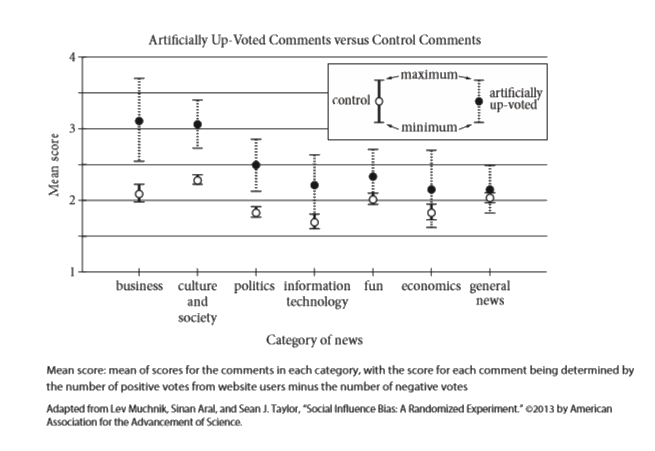

The 'wisdom of crowds" has become a mantra of the Internet age. Need to choose a new vacuum cleaner? Check out the reviews on online merchant Line Amazon. But a new study suggests that such online s scores don't always reveal the best choice. A massive controlled experiment of Web users finds that such ratings are highly susceptible to irrational "herd behavior' —and that the herd can be manipulated. Sometimes the crowd really is wiser than you. The to classic examples are guessing the weight of a bull or the number of gumballs in a jar. Your guess is probably going to be far from the mark, whereas the average of many people's choices is remarkably close to the true number. 15 But what happens when the goal is to judge something less tangible, such as the quality or worth of a product? According to one the, the wisdom of the crowd still holds—measuring ihe aggregate of people's opinions produces a stable, reliable 20 value, Skeptics, however, argue that people's opinions are easily swayed by those of others. So nudging a crowd early on by presenting contrary opinions—for example, exposing them to some very good or very bad attitudes—will steer the crowd in a 15 different direction. To test which hypothesis is true, you would need to manipulate huge numbers of people, exposing them to false information and determining how it affects their opinions. A team led b-y Sinan Aral, a network scientist at 30 the Massachusetts institute .of Technology in Cambridge, did exactly t lee Aral has been secretly . working with a popular website that aggregates news stories. The website allows users to make comments about news stories and vote each other's comments 55 up or down. The vote tallies ale visible as a number next to each comment , and the position of the comments is chronological. (Stories on the site get an average of about ten comments and about three votes per comment.)It’s a follow-up to his experiment using people’sratings of movies to measure how much individual people influence each other online (answer:alot).This time,he wanted to know how much the crowd influences the individual,and whether it can be controlled from outside.

For five months,every comment submitted by a user randomly received an“up”vote(positive),a “down”vote(negative),or as a control,no vote at all The team then observes! how users rated those comments. The users generated more than so 100,000 comments that were viewed more than 10 million times and rated more than 300,000 times by other users.

At least when it comes to comments on news sites, the crowd is more herdlike than wise. 55 Comments that received fake positive votes from the researchers were 32% more Likely to receive more positive votes compared with a control, the team reports. And those comments were no more likely than the control to be down-voted by the next viewer 60 to see them. By the end of the study, positively manipulated comments got an overall boost of about 25%. However, the same did not hold true for negative manipulation. The ratings of comments that got a fake down vote were usually negated by an up vote by the next user to see them. "Our experiment does not reveal the psychology behind people's decisions," Aral says, "but an intuitive explanation is that people are more skeptical of negative social influence. They're more 70 willing to go along with positive opinions from other people." - • -Duncan Watts, a network scientist at Microsoft Research in New York City, agrees with that conclusion. But] one question is whether the 75 positive 'herding] bias is specific to this site" or true in general, Watts says. lie points out that the category of the news items in the experiment had a strong effect on how much people could be manipulated. "I would have thought that 'business' is so pretty similar to 'economics,' yet they find a much stronger effect (almost 50% stronger) for the former • than the latter. What explains this difference? If we're going to apply these findings in the real world, we'll need to know the answers.Will companies be able to boost their products by manipulating online ratings on a massive scale? “That is easier said than done,”Wattssays.If people detect—or learn—that comments on a website are being manipulated,the herd may spook and leave entirely.

According to the figure which category of news showed the smallest difference in mean score between artifically up-voted comments and control comments?

View SolutionThe 'wisdom of crowds" has become a mantra of the Internet age. Need to choose a new vacuum cleaner? Check out the reviews on online merchant Line Amazon. But a new study suggests that such online s scores don't always reveal the best choice. A massive controlled experiment of Web users finds that such ratings are highly susceptible to irrational "herd behavior' —and that the herd can be manipulated. Sometimes the crowd really is wiser than you. The to classic examples are guessing the weight of a bull or the number of gumballs in a jar. Your guess is probably going to be far from the mark, whereas the average of many people's choices is remarkably close to the true number. 15 But what happens when the goal is to judge something less tangible, such as the quality or worth of a product? According to one the, the wisdom of the crowd still holds—measuring ihe aggregate of people's opinions produces a stable, reliable 20 value, Skeptics, however, argue that people's opinions are easily swayed by those of others. So nudging a crowd early on by presenting contrary opinions—for example, exposing them to some very good or very bad attitudes—will steer the crowd in a 15 different direction. To test which hypothesis is true, you would need to manipulate huge numbers of people, exposing them to false information and determining how it affects their opinions. A team led b-y Sinan Aral, a network scientist at 30 the Massachusetts institute .of Technology in Cambridge, did exactly t lee Aral has been secretly . working with a popular website that aggregates news stories. The website allows users to make comments about news stories and vote each other's comments 55 up or down. The vote tallies ale visible as a number next to each comment , and the position of the comments is chronological. (Stories on the site get an average of about ten comments and about three votes per comment.)It’s a follow-up to his experiment using people’sratings of movies to measure how much individual people influence each other online (answer:alot).This time,he wanted to know how much the crowd influences the individual,and whether it can be controlled from outside.

For five months,every comment submitted by a user randomly received an“up”vote(positive),a “down”vote(negative),or as a control,no vote at all The team then observes! how users rated those comments. The users generated more than so 100,000 comments that were viewed more than 10 million times and rated more than 300,000 times by other users.

At least when it comes to comments on news sites, the crowd is more herdlike than wise. 55 Comments that received fake positive votes from the researchers were 32% more Likely to receive more positive votes compared with a control, the team reports. And those comments were no more likely than the control to be down-voted by the next viewer 60 to see them. By the end of the study, positively manipulated comments got an overall boost of about 25%. However, the same did not hold true for negative manipulation. The ratings of comments that got a fake down vote were usually negated by an up vote by the next user to see them. "Our experiment does not reveal the psychology behind people's decisions," Aral says, "but an intuitive explanation is that people are more skeptical of negative social influence. They're more 70 willing to go along with positive opinions from other people." - • -Duncan Watts, a network scientist at Microsoft Research in New York City, agrees with that conclusion. But] one question is whether the 75 positive 'herding] bias is specific to this site" or true in general, Watts says. lie points out that the category of the news items in the experiment had a strong effect on how much people could be manipulated. "I would have thought that 'business' is so pretty similar to 'economics,' yet they find a much stronger effect (almost 50% stronger) for the former • than the latter. What explains this difference? If we're going to apply these findings in the real world, we'll need to know the answers.Will companies be able to boost their products by manipulating online ratings on a massive scale? “That is easier said than done,”Wattssays.If people detect—or learn—that comments on a website are being manipulated,the herd may spook and leave entirely.

Over the course of the passage, the main focus shifts from a discussion of an experiment and its results to

View Solution

Knowledge Check

The cost of a gym membership is a onetime fee of $140, plus a monthly fee of $ 40. Brendan wrote a $500 check to pay his gym membership for a certain number of months, including the onetime fee. How many months of membership did he pay for?

A3B4C9D12Merry joined an online community that charges a monthly fee of $15. A one-time enrollment fee of $50 was charged when she joined. Which of the following represents the total amount of fee that merry has paid to the community organizers after m months, in dollars?

A15m+50B15+50mC15m-50D(15+50)mA laser tag arean sells two types of memberships. One package costs $325 for one year of membership with an unilimated number of visits. The second package has a $125 enrollment fee, includes five free visits, and costs and additional $8 per visit after the first five. How many visits over a one-year period would a person who purchese the second package need to use for teh cost to equal that of the one-year membership ?

A20B25C30D40

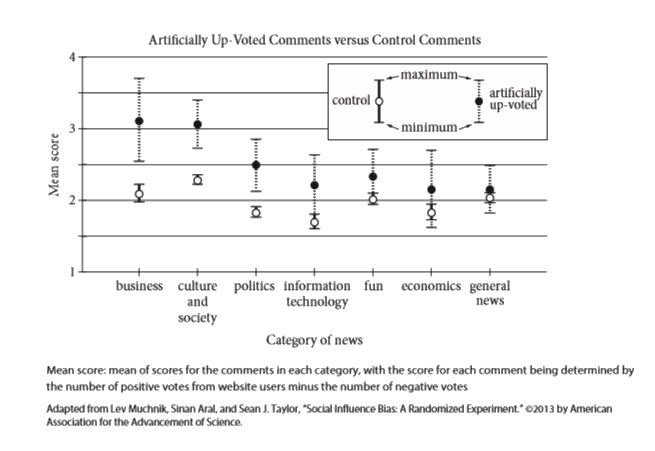

The 'wisdom of crowds" has become a mantra of the Internet age. Need to choose a new vacuum cleaner? Check out the reviews on online merchant Line Amazon. But a new study suggests that such online s scores don't always reveal the best choice. A massive controlled experiment of Web users finds that such ratings are highly susceptible to irrational "herd behavior' —and that the herd can be manipulated. Sometimes the crowd really is wiser than you. The to classic examples are guessing the weight of a bull or the number of gumballs in a jar. Your guess is probably going to be far from the mark, whereas the average of many people's choices is remarkably close to the true number. 15 But what happens when the goal is to judge something less tangible, such as the quality or worth of a product? According to one the, the wisdom of the crowd still holds—measuring ihe aggregate of people's opinions produces a stable, reliable 20 value, Skeptics, however, argue that people's opinions are easily swayed by those of others. So nudging a crowd early on by presenting contrary opinions—for example, exposing them to some very good or very bad attitudes—will steer the crowd in a 15 different direction. To test which hypothesis is true, you would need to manipulate huge numbers of people, exposing them to false information and determining how it affects their opinions. A team led b-y Sinan Aral, a network scientist at 30 the Massachusetts institute .of Technology in Cambridge, did exactly t lee Aral has been secretly . working with a popular website that aggregates news stories. The website allows users to make comments about news stories and vote each other's comments 55 up or down. The vote tallies ale visible as a number next to each comment , and the position of the comments is chronological. (Stories on the site get an average of about ten comments and about three votes per comment.)It’s a follow-up to his experiment using people’sratings of movies to measure how much individual people influence each other online (answer:alot).This time,he wanted to know how much the crowd influences the individual,and whether it can be controlled from outside.

For five months,every comment submitted by a user randomly received an“up”vote(positive),a “down”vote(negative),or as a control,no vote at all The team then observes! how users rated those comments. The users generated more than so 100,000 comments that were viewed more than 10 million times and rated more than 300,000 times by other users.

At least when it comes to comments on news sites, the crowd is more herdlike than wise. 55 Comments that received fake positive votes from the researchers were 32% more Likely to receive more positive votes compared with a control, the team reports. And those comments were no more likely than the control to be down-voted by the next viewer 60 to see them. By the end of the study, positively manipulated comments got an overall boost of about 25%. However, the same did not hold true for negative manipulation. The ratings of comments that got a fake down vote were usually negated by an up vote by the next user to see them. "Our experiment does not reveal the psychology behind people's decisions," Aral says, "but an intuitive explanation is that people are more skeptical of negative social influence. They're more 70 willing to go along with positive opinions from other people." - • -Duncan Watts, a network scientist at Microsoft Research in New York City, agrees with that conclusion. But] one question is whether the 75 positive 'herding] bias is specific to this site" or true in general, Watts says. lie points out that the category of the news items in the experiment had a strong effect on how much people could be manipulated. "I would have thought that 'business' is so pretty similar to 'economics,' yet they find a much stronger effect (almost 50% stronger) for the former • than the latter. What explains this difference? If we're going to apply these findings in the real world, we'll need to know the answers.Will companies be able to boost their products by manipulating online ratings on a massive scale? “That is easier said than done,”Wattssays.If people detect—or learn—that comments on a website are being manipulated,the herd may spook and leave entirely.

As used in line 85,"boost"most nearly means

View SolutionThe 'wisdom of crowds" has become a mantra of the Internet age. Need to choose a new vacuum cleaner? Check out the reviews on online merchant Line Amazon. But a new study suggests that such online s scores don't always reveal the best choice. A massive controlled experiment of Web users finds that such ratings are highly susceptible to irrational "herd behavior' —and that the herd can be manipulated. Sometimes the crowd really is wiser than you. The to classic examples are guessing the weight of a bull or the number of gumballs in a jar. Your guess is probably going to be far from the mark, whereas the average of many people's choices is remarkably close to the true number. 15 But what happens when the goal is to judge something less tangible, such as the quality or worth of a product? According to one the, the wisdom of the crowd still holds—measuring ihe aggregate of people's opinions produces a stable, reliable 20 value, Skeptics, however, argue that people's opinions are easily swayed by those of others. So nudging a crowd early on by presenting contrary opinions—for example, exposing them to some very good or very bad attitudes—will steer the crowd in a 15 different direction. To test which hypothesis is true, you would need to manipulate huge numbers of people, exposing them to false information and determining how it affects their opinions. A team led b-y Sinan Aral, a network scientist at 30 the Massachusetts institute .of Technology in Cambridge, did exactly t lee Aral has been secretly . working with a popular website that aggregates news stories. The website allows users to make comments about news stories and vote each other's comments 55 up or down. The vote tallies ale visible as a number next to each comment , and the position of the comments is chronological. (Stories on the site get an average of about ten comments and about three votes per comment.)It’s a follow-up to his experiment using people’sratings of movies to measure how much individual people influence each other online (answer:alot).This time,he wanted to know how much the crowd influences the individual,and whether it can be controlled from outside.

For five months,every comment submitted by a user randomly received an“up”vote(positive),a “down”vote(negative),or as a control,no vote at all The team then observes! how users rated those comments. The users generated more than so 100,000 comments that were viewed more than 10 million times and rated more than 300,000 times by other users.

At least when it comes to comments on news sites, the crowd is more herdlike than wise. 55 Comments that received fake positive votes from the researchers were 32% more Likely to receive more positive votes compared with a control, the team reports. And those comments were no more likely than the control to be down-voted by the next viewer 60 to see them. By the end of the study, positively manipulated comments got an overall boost of about 25%. However, the same did not hold true for negative manipulation. The ratings of comments that got a fake down vote were usually negated by an up vote by the next user to see them. "Our experiment does not reveal the psychology behind people's decisions," Aral says, "but an intuitive explanation is that people are more skeptical of negative social influence. They're more 70 willing to go along with positive opinions from other people." - • -Duncan Watts, a network scientist at Microsoft Research in New York City, agrees with that conclusion. But] one question is whether the 75 positive 'herding] bias is specific to this site" or true in general, Watts says. lie points out that the category of the news items in the experiment had a strong effect on how much people could be manipulated. "I would have thought that 'business' is so pretty similar to 'economics,' yet they find a much stronger effect (almost 50% stronger) for the former • than the latter. What explains this difference? If we're going to apply these findings in the real world, we'll need to know the answers.Will companies be able to boost their products by manipulating online ratings on a massive scale? “That is easier said than done,”Wattssays.If people detect—or learn—that comments on a website are being manipulated,the herd may spook and leave entirely.

Which action would best address a question watts raises about the study?

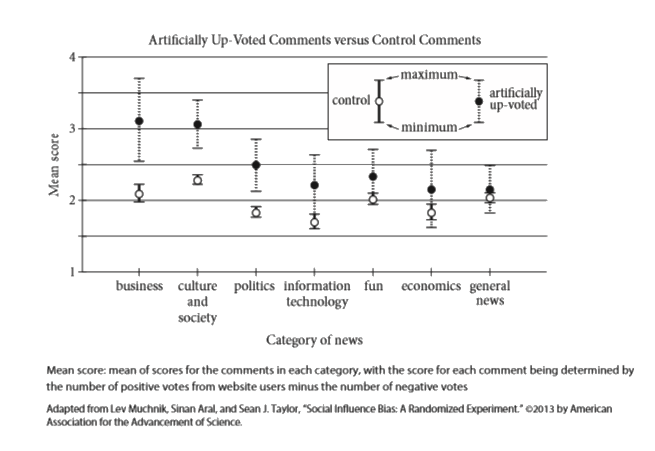

View SolutionThe 'wisdom of crowds" has become a mantra of the Internet age. Need to choose a new vacuum cleaner? Check out the reviews on online merchant Line Amazon. But a new study suggests that such online s scores don't always reveal the best choice. A massive controlled experiment of Web users finds that such ratings are highly susceptible to irrational "herd behavior' —and that the herd can be manipulated. Sometimes the crowd really is wiser than you. The to classic examples are guessing the weight of a bull or the number of gumballs in a jar. Your guess is probably going to be far from the mark, whereas the average of many people's choices is remarkably close to the true number. 15 But what happens when the goal is to judge something less tangible, such as the quality or worth of a product? According to one the, the wisdom of the crowd still holds—measuring ihe aggregate of people's opinions produces a stable, reliable 20 value, Skeptics, however, argue that people's opinions are easily swayed by those of others. So nudging a crowd early on by presenting contrary opinions—for example, exposing them to some very good or very bad attitudes—will steer the crowd in a 15 different direction. To test which hypothesis is true, you would need to manipulate huge numbers of people, exposing them to false information and determining how it affects their opinions. A team led b-y Sinan Aral, a network scientist at 30 the Massachusetts institute .of Technology in Cambridge, did exactly t lee Aral has been secretly . working with a popular website that aggregates news stories. The website allows users to make comments about news stories and vote each other's comments 55 up or down. The vote tallies ale visible as a number next to each comment , and the position of the comments is chronological. (Stories on the site get an average of about ten comments and about three votes per comment.)It’s a follow-up to his experiment using people’sratings of movies to measure how much individual people influence each other online (answer:alot).This time,he wanted to know how much the crowd influences the individual,and whether it can be controlled from outside.

For five months,every comment submitted by a user randomly received an“up”vote(positive),a “down”vote(negative),or as a control,no vote at all The team then observes! how users rated those comments. The users generated more than so 100,000 comments that were viewed more than 10 million times and rated more than 300,000 times by other users.

At least when it comes to comments on news sites, the crowd is more herdlike than wise. 55 Comments that received fake positive votes from the researchers were 32% more Likely to receive more positive votes compared with a control, the team reports. And those comments were no more likely than the control to be down-voted by the next viewer 60 to see them. By the end of the study, positively manipulated comments got an overall boost of about 25%. However, the same did not hold true for negative manipulation. The ratings of comments that got a fake down vote were usually negated by an up vote by the next user to see them. "Our experiment does not reveal the psychology behind people's decisions," Aral says, "but an intuitive explanation is that people are more skeptical of negative social influence. They're more 70 willing to go along with positive opinions from other people." - • -Duncan Watts, a network scientist at Microsoft Research in New York City, agrees with that conclusion. But] one question is whether the 75 positive 'herding] bias is specific to this site" or true in general, Watts says. lie points out that the category of the news items in the experiment had a strong effect on how much people could be manipulated. "I would have thought that 'business' is so pretty similar to 'economics,' yet they find a much stronger effect (almost 50% stronger) for the former • than the latter. What explains this difference? If we're going to apply these findings in the real world, we'll need to know the answers.Will companies be able to boost their products by manipulating online ratings on a massive scale? “That is easier said than done,”Wattssays.If people detect—or learn—that comments on a website are being manipulated,the herd may spook and leave entirely.

In the figure, which category of news have an artifically up-voted mean square of 2.5?

View SolutionThe 'wisdom of crowds" has become a mantra of the Internet age. Need to choose a new vacuum cleaner? Check out the reviews on online merchant Line Amazon. But a new study suggests that such online s scores don't always reveal the best choice. A massive controlled experiment of Web users finds that such ratings are highly susceptible to irrational "herd behavior' —and that the herd can be manipulated. Sometimes the crowd really is wiser than you. The to classic examples are guessing the weight of a bull or the number of gumballs in a jar. Your guess is probably going to be far from the mark, whereas the average of many people's choices is remarkably close to the true number. 15 But what happens when the goal is to judge something less tangible, such as the quality or worth of a product? According to one the, the wisdom of the crowd still holds—measuring ihe aggregate of people's opinions produces a stable, reliable 20 value, Skeptics, however, argue that people's opinions are easily swayed by those of others. So nudging a crowd early on by presenting contrary opinions—for example, exposing them to some very good or very bad attitudes—will steer the crowd in a 15 different direction. To test which hypothesis is true, you would need to manipulate huge numbers of people, exposing them to false information and determining how it affects their opinions. A team led b-y Sinan Aral, a network scientist at 30 the Massachusetts institute .of Technology in Cambridge, did exactly t lee Aral has been secretly . working with a popular website that aggregates news stories. The website allows users to make comments about news stories and vote each other's comments 55 up or down. The vote tallies ale visible as a number next to each comment , and the position of the comments is chronological. (Stories on the site get an average of about ten comments and about three votes per comment.)It’s a follow-up to his experiment using people’sratings of movies to measure how much individual people influence each other online (answer:alot).This time,he wanted to know how much the crowd influences the individual,and whether it can be controlled from outside.

For five months,every comment submitted by a user randomly received an“up”vote(positive),a “down”vote(negative),or as a control,no vote at all The team then observes! how users rated those comments. The users generated more than so 100,000 comments that were viewed more than 10 million times and rated more than 300,000 times by other users.

At least when it comes to comments on news sites, the crowd is more herdlike than wise. 55 Comments that received fake positive votes from the researchers were 32% more Likely to receive more positive votes compared with a control, the team reports. And those comments were no more likely than the control to be down-voted by the next viewer 60 to see them. By the end of the study, positively manipulated comments got an overall boost of about 25%. However, the same did not hold true for negative manipulation. The ratings of comments that got a fake down vote were usually negated by an up vote by the next user to see them. "Our experiment does not reveal the psychology behind people's decisions," Aral says, "but an intuitive explanation is that people are more skeptical of negative social influence. They're more 70 willing to go along with positive opinions from other people." - • -Duncan Watts, a network scientist at Microsoft Research in New York City, agrees with that conclusion. But] one question is whether the 75 positive 'herding] bias is specific to this site" or true in general, Watts says. lie points out that the category of the news items in the experiment had a strong effect on how much people could be manipulated. "I would have thought that 'business' is so pretty similar to 'economics,' yet they find a much stronger effect (almost 50% stronger) for the former • than the latter. What explains this difference? If we're going to apply these findings in the real world, we'll need to know the answers.Will companies be able to boost their products by manipulating online ratings on a massive scale? “That is easier said than done,”Wattssays.If people detect—or learn—that comments on a website are being manipulated,the herd may spook and leave entirely.

As used in line 86,"Scale" most nearly means

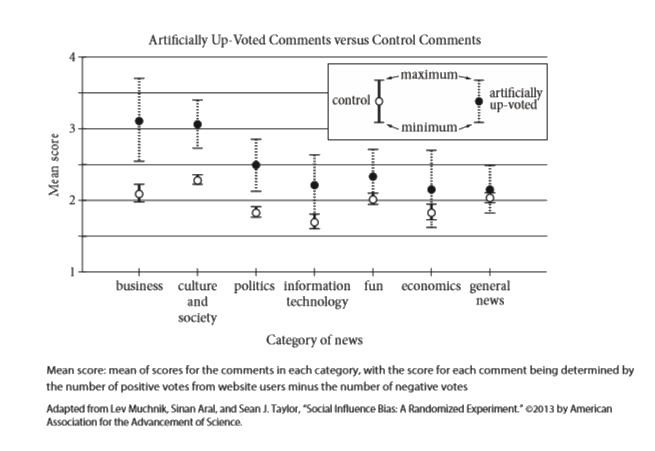

View SolutionThe 'wisdom of crowds" has become a mantra of the Internet age. Need to choose a new vacuum cleaner? Check out the reviews on online merchant Line Amazon. But a new study suggests that such online s scores don't always reveal the best choice. A massive controlled experiment of Web users finds that such ratings are highly susceptible to irrational "herd behavior' —and that the herd can be manipulated. Sometimes the crowd really is wiser than you. The to classic examples are guessing the weight of a bull or the number of gumballs in a jar. Your guess is probably going to be far from the mark, whereas the average of many people's choices is remarkably close to the true number. 15 But what happens when the goal is to judge something less tangible, such as the quality or worth of a product? According to one the, the wisdom of the crowd still holds—measuring ihe aggregate of people's opinions produces a stable, reliable 20 value, Skeptics, however, argue that people's opinions are easily swayed by those of others. So nudging a crowd early on by presenting contrary opinions—for example, exposing them to some very good or very bad attitudes—will steer the crowd in a 15 different direction. To test which hypothesis is true, you would need to manipulate huge numbers of people, exposing them to false information and determining how it affects their opinions. A team led b-y Sinan Aral, a network scientist at 30 the Massachusetts institute .of Technology in Cambridge, did exactly t lee Aral has been secretly . working with a popular website that aggregates news stories. The website allows users to make comments about news stories and vote each other's comments 55 up or down. The vote tallies ale visible as a number next to each comment , and the position of the comments is chronological. (Stories on the site get an average of about ten comments and about three votes per comment.)It’s a follow-up to his experiment using people’sratings of movies to measure how much individual people influence each other online (answer:alot).This time,he wanted to know how much the crowd influences the individual,and whether it can be controlled from outside.

For five months,every comment submitted by a user randomly received an“up”vote(positive),a “down”vote(negative),or as a control,no vote at all The team then observes! how users rated those comments. The users generated more than so 100,000 comments that were viewed more than 10 million times and rated more than 300,000 times by other users.

At least when it comes to comments on news sites, the crowd is more herdlike than wise. 55 Comments that received fake positive votes from the researchers were 32% more Likely to receive more positive votes compared with a control, the team reports. And those comments were no more likely than the control to be down-voted by the next viewer 60 to see them. By the end of the study, positively manipulated comments got an overall boost of about 25%. However, the same did not hold true for negative manipulation. The ratings of comments that got a fake down vote were usually negated by an up vote by the next user to see them. "Our experiment does not reveal the psychology behind people's decisions," Aral says, "but an intuitive explanation is that people are more skeptical of negative social influence. They're more 70 willing to go along with positive opinions from other people." - • -Duncan Watts, a network scientist at Microsoft Research in New York City, agrees with that conclusion. But] one question is whether the 75 positive 'herding] bias is specific to this site" or true in general, Watts says. lie points out that the category of the news items in the experiment had a strong effect on how much people could be manipulated. "I would have thought that 'business' is so pretty similar to 'economics,' yet they find a much stronger effect (almost 50% stronger) for the former • than the latter. What explains this difference? If we're going to apply these findings in the real world, we'll need to know the answers.Will companies be able to boost their products by manipulating online ratings on a massive scale? “That is easier said than done,”Wattssays.If people detect—or learn—that comments on a website are being manipulated,the herd may spook and leave entirely.

Which choice best supports the view of the "skeptics" (line 20)?

View Solution